Strategy to Migrate Oracle Databases to Amazon RDS for Oracle Using AWS DMS Effectively

Today organizations of all sizes are planning their digital transformation to leverage Cloud to improve their business capabilities, operation efficiency, and customer experience. Cloud migration has been one of the essential factors in designing workloads to scale, highly available, and reliable with manageability, high performance, and cost-effectiveness. With AWS cloud, customers can migrate workloads successfully with various migration and modernization services.

One of the critical components of workload is databases. In AWS, customers very often leverage Amazon Relational Database Service (RDS) to run their database workloads, which is a fully managed relational database. For more information on Amazon RDS features, visit Amazon RDS Features.

There are various ways to migrate Oracle databases to Amazon RDS for Oracle, and the most commonly used options are AWS Database Migration Service (AWS DMS), Oracle Data Pump import/export tool, and Oracle Golden Gate.

AWS Database Migration Service (AWS DMS) helps customers to migrate databases to the AWS cloud. AWS DMS is a reliable, low-cost, simple-to-use service that quickly and securely migrates databases. It supports homogeneous migrations and heterogeneous migrations between different database platforms.

In this blog, I’ll share the strategy to migrate Oracle Databases to Amazon RDS for Oracle using AWS DMS. The source database is self-managed in the on-premises environment, and the target database is Amazon RDS for Oracle in the AWS environment.

Overview

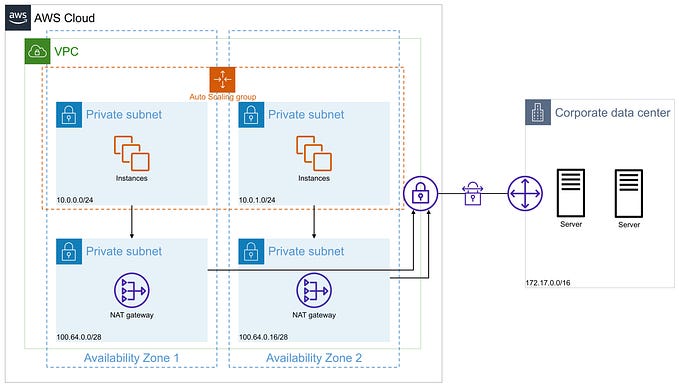

The source Oracle database runs on an on-premises environment, and the target database runs on Amazon RDS for Oracle. The source Oracle database exports a schema with content as METADATA_ONLY, and the target database imports the schema using Oracle Data Pump. AWS DMS is utilized to migrate existing data using the full-load migration type and replicate ongoing changes using the CDC migration type. Multiple AWS DMS tasks can be created with different DMS migration types and configurations to support the migration efficiently.

Prerequisites

Amazon RDS for Oracle is created to use as a target database, and network connectivity should be present between the source and target database. It’s recommended to configure a network using AWS Direct Connect or a VPN. For more information, visit Setting up a network for a replication instance.

Export Schema with CONTENT=METADATA_ONLY using Oracle Data Pump and upload it to Amazon S3 bucket. For Amazon S3 upload objects options, visit Uploading objects.

Ensure Amazon S3 integration is configured to transfer the dump files between Amazon RDS for Oracle and Amazon S3 bucket. For more information, visit Amazon S3 integration.

Review the following AWS DMS documentation to use an Oracle database as a source and target for AWS DMS to ensure applicable preconditions are fulfilled.

- For Oracle database as a source, visit Using an Oracle database as a source.

- For Oracle database as a target, visit Using an Oracle database as a target.

Here are the important requisites for AWS DMS:

- Verify that AWS DMS supports the source and target databases version.

- Make sure that ARCHIVELOG mode is on in the source Oracle database.

- Set up supplemental logging in the source Oracle database.

- Required user account privileges for source and target database.

Provision sufficient compute resources for AWS DMS Replication instance and Amazon RDS for Oracle. Perform a dry run by provisioning negligibly higher compute resources than anticipated and closely monitor resource utilization and resize as needed.

Ensure there’s no bandwidth throttling between the on-premises and AWS network link.

AWS DMS

Follow the below step while setting up the AWS DMS:

- Split the DMS tasks for tables that contain LOB data and do not contain LOB data.

- In the DMS task setting, use

CommitRateto transfer the maximum number of records together. By default, it’s 10000, and the maximum value is 50000. - In the DMS full-load task setting, use

MaxFullLoadSubTasksto load the maximum number of tables in parallel. By default, it’s 8, and the maximum value is 49. - Ensure

LobMaxSizeis configured to the maximum LOB size according to the source database columns. - Use

parallel-loadto optimize migration speed and performance. - For reading the redo logs for change data capture (CDC) for Oracle as a source, Binary Reader is recommended for better CDC performance.

- To start AWS DMS CDC tasks at a particular interval, use

CdcStartPositionorCdcStartTime.

For more information, visit Target metadata task settings.

Migrate Large Binary Objects (LOBs) Data

To migrate Large binary objects (LOBs), the AWS DMS task supports Full, Limited, and Inline LOB modes. Setting LOB mode is the crucial parameter as it engages an essential role in the migration time.

For AWS DMS full-load migration tasks, identify the tables and columns with LOB datatypes and use maximum LOB size per table to create separate LOB categorized tasks for LOB tables. E.g., a separate task for tables with a maximum LOB size of less than 100 KB, between 100 KB to 500 KB, between 500 KB to 750 KB MB, and so forth.

For AWS DMS CDC migration tasks, identify the tables with the parent-child relationship and group them in a single task. For the non-parent-child relationship table, create separate tasks as needed. You can configure the maximum LOB size according to the source database column with some buffer to avoid the LOB data truncate issue.

For the non-parent-child relationship table, create separate tasks as needed. You can configure the maximum LOB size according to the source database column with some buffer to avoid the LOB data truncate issue.

Creating separate tasks covering small LOB ranges will improve the migration speed.

Further, you can use Inline LOB mode to improve performance.

For more information, visit Setting LOB support for source databases in an AWS DMS task.

Before Full-load

- Create tablespaces, roles, and optionally, schema with

DBMS_METADATA GET_DDLfunction according to the source database and grant privileges in the target database. - Import a schema excluding

INDEXandCONSTRAINT. Optionally, you can only excludeINDEXbut disable foreign key constraints before the AWS DMS full-load. While performing the import job, make sure you use a log file to ensure there are no errors in import job.

Once the import job is completed successfully, you can start the AWS DMS full-load tasks.

Before CDC

- Import a schema including only

INDEXandCONSTRAINT. While performing the import job, make sure you use a log file to ensure there are no errors in import job. - Once the import job is completed successfully, you can recompile the schema and start AWS DMS CDC tasks.

Keep AWS DMS CDC tasks running to replicate ongoing changes until the cut-over window. Also, AWS DMS tasks give the flexibility to stop and resume if needed.

Data Validation

Data validation is important for any migration to ensure that the data is migrated accurately from the source database to the target database.

AWS DMS provides data validation functionality where it compares each data between the source and target databases. For more information, visit AWS DMS data validation.

Apart from that, you can prepare custom reconciliation scripts to compare the data against source and target databases for data validation. The scripts can be leveraged to verify the following details:

- Tables

- Functions

- Indexes

- Constraints

- Table column

- Row count

- Data reconciliation checksum for each column

Security

Use the following to perform the migration using security best practices:

- Enable AWS DMS Replication instance encryption with AWS KMS to keep storage data secure.

- Enable AWS DMS Endpoints encryption to keep endpoint data secure.

- Enable the AWS DMS Endpoints Secure Socket Layer (SSL) mode verify-ca with the AWS DMS CA certificate.

- Use a private network using AWS Direct Connect or a VPN to allow connection from AWS DMS to the source database.

- Enable Amazon RDS for Oracle encryption with AWS KMS.

- Enable secure connection for source Oracle database using the Oracle SSL option.

- Enable secure connection for Amazon RDS for Oracle using the Oracle SSL option in the RDS option group with the appropriate TLS version.

- Enable AWS DMS Endpoints encryption to keep endpoint data secure.

- Enable Amazon S3 bucket encryption.

- Use Amazon S3 VPC Endpoint to access the S3 bucket for Oracle Data Pump.

- User network ACLs and security groups allow connection in AWS DMS and Amazon RDS.

- Use least privilege IAM policies to control access to AWS resources.

For more information on Amazon RDS security, visit Security in Amazon RDS.

For more information on AWS DMS security, visit Security in AWS Database Migration Service.

Monitoring and Logging

Use the following to set up monitoring and logging for Amazon RDS and AWS DMS:

AWS Account

- AWS CloudTail: To enable operational and risk auditing, governance, and compliance of the AWS account. For more information, visit Working with CloudTrail.

Amazon VPC

- VPC Flow Logs: To capture information about the IP traffic going to and from network interfaces in Amazon VPC. For more information, visit Logging IP traffic using VPC Flow Logs.

AWS DMS

- Task Logging: Enable task logging during the migration process to debug replication tasks. For more information, visit Logging task settings.

- AWS DMS Events and Notifications: To receive a notification when the AWS DMS event occurs. For more information, visit Events and notifications in AWS DMS.

- Amazon CloudWatch: View the metrics for AWS DMS tasks and a replication instance. For more information, visit Monitoring replication tasks using Amazon CloudWatch.

Amazon RDS

- Amazon RDS Logs: Access database logs for auditing and troubleshooting. For more information, visit Oracle database log files.

- Amazon RDS Events: To know the changes in the Amazon RDS environment. For more information, visit Monitoring Amazon RDS events.

- Performance Insights: To analyze the database performance. For more information, visit Monitoring DB load with Performance Insights on Amazon RDS.

- Enhanced Monitoring: Monitor the operating system of your DB instance in real-time. For more information, visit Monitoring OS metrics with Enhanced Monitoring.

- Amazon CloudWatch: Use Amazon CloudWatch dashboards and alarms with near real-time Amazon RDS metrics. For more information, visit Monitoring Amazon RDS metrics with Amazon CloudWatch.

In this blog, you have learned the strategy to migrate Oracle Databases to Amazon RDS for Oracle using AWS DMS seamlessly and techniques to set up and configure AWS DMS to increase the migration speed and reduce the migration time with security considerations and appropriate logging and monitoring solutions for AWS DMS and Amazon RDS.

Another common solution is to perform full data import using Oracle Data Pump and use AWS CDC to replicate ongoing changes.

To get started, here’s the AWS CloudFormation template for AWS DMS for Oracle.

To learn more about AWS DMS best practices, visit Best practices for AWS Database Migration Service.

For more information on Oracle database migration to AWS, visit Migrate an on-premises Oracle database to Amazon RDS for Oracle and Migrating Oracle databases to the AWS Cloud.